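Following the previous post on the Volley source code: our projects now rely mostly on OkHttpUtils and OkHttp, so it is worth understanding how OkHttp works in order to locate and fix network-related problems quickly. Let's get started.

The outline of this post is roughly:

- Basic usage of OkHttp

- OkHttp source code analysis (v3.5.0)

- OkHttp connection pool reuse

- Pros and cons of OkHttp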

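Basic usage of OkHttp

Add the dependency in Gradle:

`compile 'com.squareup.okhttp3:okhttp:3.5.0'`

1. First, create an OkHttpClient

`OkHttpClient client = new OkHttpClient();`

2. Build a Request object

`Request request = new Request.Builder()`

3. Wrap the Request in a Call

`Call call = client.newCall(request);`

4. Call the synchronous or the asynchronous request method as needed

`// Synchronous call: returns a Response and may throw an IOException`

A synchronous call blocks the thread that makes it, so it must not be made on the main thread and is rarely used directly.

The callbacks of an asynchronous call run on a worker thread. The UI cannot be updated from a worker thread, so the result has to be handed back with runOnUiThread() or a Handler.

POST requests work in much the same way and should be familiar, so let's move on to the main topic: the source code.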

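OkHttp source code analysis

OkHttpClient

First, look at the method we use to start a network request:

`Call call = okHttpClient.newCall(request);`

RealCall

The enqueue we invoke on Call is actually RealCall's enqueue method:

`call.enqueue(new ...);`

Now let's see how RealCall implements enqueue:

`public void enqueue(Callback responseCallback) {`

RealCall's enqueue hands the request over to the dispatcher, which does the actual scheduling, so the dispatcher is what we look at next.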

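Dispatcher

`// Maximum number of concurrent requests`

Dispatcher has two constructors, so you can supply your own thread pool. If you do not, it creates a default pool with a core size of 0 and a maximum size of Integer.MAX_VALUE, which suits a large number of short-lived tasks.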
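To make the pool configuration concrete, here is a minimal JDK-only sketch of an executor with the shape described above. The class name `DispatcherPoolSketch` is hypothetical, and the 60-second idle timeout is an assumption that is not stated in the text; OkHttp's own dispatcher also names its threads through a thread factory, which is left out here.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class DispatcherPoolSketch {
    /** Builds an executor with no core threads and an effectively unbounded maximum. */
    static ThreadPoolExecutor newDispatcherStylePool() {
        return new ThreadPoolExecutor(
                0,                      // core size: no threads are kept alive when idle
                Integer.MAX_VALUE,      // maximum size: effectively unbounded
                60, TimeUnit.SECONDS,   // assumed idle timeout before a thread is reclaimed
                new SynchronousQueue<Runnable>()); // hands each task straight to a thread
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = newDispatcherStylePool();
        for (int i = 0; i < 3; i++) {
            final int id = i;
            pool.execute(() ->
                    System.out.println("task " + id + " on " + Thread.currentThread().getName()));
        }
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
    }
}
```

Because the queue holds nothing, every submitted task either reuses an idle thread or starts a new one, which is why the dispatcher has to enforce its own request limits instead of relying on the pool size.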

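Next, how the dispatcher's enqueue works:

If the number of running asynchronous requests is below 64 and the number of running requests to the same host is below 5, the request is added to runningAsyncCalls and executed on the thread pool. Otherwise it is added to readyAsyncCalls, where it waits.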

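How is runningCallsForHost implemented? It walks runningAsyncCalls and counts the calls whose host matches the host of the given call.

In other words, among the requests currently running, at most 5 may target the same host.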
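The admission rule described above can be sketched with plain JDK collections. This is a simplified model, not OkHttp's source: `MiniDispatcher` is a hypothetical class, and each call is represented only by its host name.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class MiniDispatcher {
    static final int MAX_REQUESTS = 64;         // limit on running async calls
    static final int MAX_REQUESTS_PER_HOST = 5; // limit on running calls to one host

    final Deque<String> readyAsyncCalls = new ArrayDeque<>();   // waiting calls, by host
    final Deque<String> runningAsyncCalls = new ArrayDeque<>(); // running calls, by host

    /** Counts the running calls that target the given host. */
    int runningCallsForHost(String host) {
        int count = 0;
        for (String running : runningAsyncCalls) {
            if (running.equals(host)) count++;
        }
        return count;
    }

    /** Returns true if the call starts running now, false if it has to wait. */
    synchronized boolean enqueue(String host) {
        if (runningAsyncCalls.size() < MAX_REQUESTS
                && runningCallsForHost(host) < MAX_REQUESTS_PER_HOST) {
            runningAsyncCalls.add(host); // a real dispatcher would also submit it to the executor
            return true;
        }
        readyAsyncCalls.add(host);
        return false;
    }

    public static void main(String[] args) {
        MiniDispatcher d = new MiniDispatcher();
        for (int i = 0; i < 6; i++) {
            System.out.println("a.example call " + i + " runs now: " + d.enqueue("a.example"));
        }
        System.out.println("b.example call runs now: " + d.enqueue("b.example"));
    }
}
```

In this run the sixth call to `a.example` is parked in the ready queue, while the call to `b.example` still starts immediately because the per-host limit is tracked separately for each host.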

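What gets passed to the dispatcher is an AsyncCall, which is then executed, so let's look at the AsyncCall class.

`final class AsyncCall extends NamedRunnable {`

NamedRunnable implements Runnable, and AsyncCall's execute() contains the actual handling of the network request.

`Response response = getResponseWithInterceptorChain();`

This is clearly where the request is processed. Before looking at its implementation, let's first see how client.dispatcher().finished is implemented.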

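`/** Used by {@code AsyncCall#run} to signal completion. */`

Because promoteCalls is true for asynchronous calls, finished goes on to call the promoteCalls method.

So when a request ends it calls finished, which eventually reaches promoteCalls, and promoteCalls moves requests from the asynchronous waiting queue into the asynchronous running queue:

- If the running queue is already full, nothing is added and the method returns.

- If the waiting queue holds no pending requests, there is nothing more to do and the method returns.

- If neither case applies, the waiting queue is traversed from the head. If a request's host satisfies the rule that at most 5 running requests may target the same host, the request is removed from the waiting queue, added to the running queue, and handed to the thread pool. If it does not, the traversal continues with the next request.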
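The three rules above can be sketched as follows. This is a simplified, JDK-only model rather than OkHttp's code: `PromotionSketch` is a hypothetical class, calls are represented by host names, and the limits are constructor parameters so the demo can use small values.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Iterator;

class PromotionSketch {
    final int maxRequests;
    final int maxRequestsPerHost;
    final Deque<String> ready = new ArrayDeque<>();   // waiting calls, by host
    final Deque<String> running = new ArrayDeque<>(); // running calls, by host

    PromotionSketch(int maxRequests, int maxRequestsPerHost) {
        this.maxRequests = maxRequests;
        this.maxRequestsPerHost = maxRequestsPerHost;
    }

    int runningCallsForHost(String host) {
        int count = 0;
        for (String h : running) if (h.equals(host)) count++;
        return count;
    }

    /** Called when a call completes: drop it from the running queue, then promote waiters. */
    synchronized void finished(String host) {
        if (!running.remove(host)) throw new AssertionError("call was not running");
        promoteCalls();
    }

    private void promoteCalls() {
        if (running.size() >= maxRequests) return; // rule 1: already at capacity
        if (ready.isEmpty()) return;               // rule 2: nothing is waiting
        for (Iterator<String> i = ready.iterator(); i.hasNext(); ) { // rule 3
            String host = i.next();
            if (runningCallsForHost(host) < maxRequestsPerHost) {
                i.remove();
                running.add(host); // a real dispatcher would also execute the call here
            }
            if (running.size() >= maxRequests) return; // capacity reached, stop promoting
        }
    }

    public static void main(String[] args) {
        PromotionSketch d = new PromotionSketch(3, 2);
        d.running.add("a"); d.running.add("a"); d.running.add("b");
        d.ready.add("a"); d.ready.add("c");
        d.finished("b");
        System.out.println("running=" + d.running + " ready=" + d.ready);
        // prints: running=[a, a, c] ready=[a]
    }
}
```

In the demo the waiting call to host `a` is skipped because two calls to `a` are already running, so the call to host `c` behind it is promoted instead.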

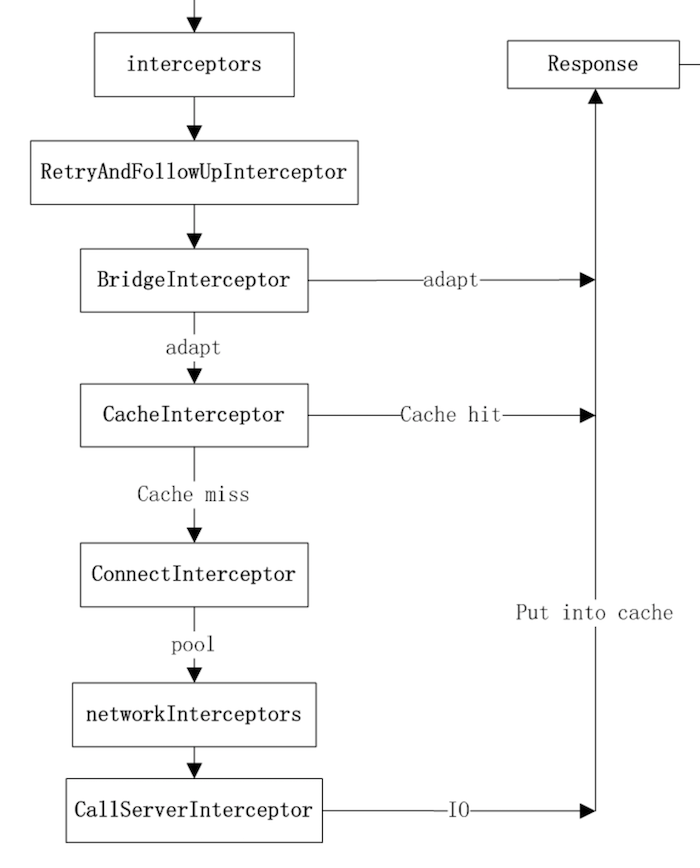

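Interceptors

Next, look at RealCall's getResponseWithInterceptorChain method. It assembles the interceptor list in order (the client's application interceptors, RetryAndFollowUpInterceptor, BridgeInterceptor, CacheInterceptor, ConnectInterceptor, the network interceptors, and finally CallServerInterceptor) and starts a RealInterceptorChain over that list.

Now for the implementation of RealInterceptorChain:

Its proceed method creates a new RealInterceptorChain with index + 1, fetches the current interceptor with interceptors.get(index), calls that interceptor's intercept with the new chain, and returns the resulting Response.

Every interceptor in the chain first runs the code that precedes its call to chain.proceed(). Once the last interceptor has finished, it returns the final response, which travels back as the return value of each chain.proceed() call. Each interceptor then runs the code that follows chain.proceed(), which is typically post-processing of the response.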
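The before/after ordering is easiest to see in a small model. The sketch below is a JDK-only imitation of the pattern, assuming string requests and responses; `ChainSketch`, `logging`, and `run` are hypothetical names, not OkHttp APIs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ChainSketch {
    interface Interceptor {
        String intercept(Chain chain);
    }

    /** Simplified stand-in for RealInterceptorChain: the shared list plus the current index. */
    static final class Chain {
        final List<Interceptor> interceptors;
        final int index;
        final String request;

        Chain(List<Interceptor> interceptors, int index, String request) {
            this.interceptors = interceptors;
            this.index = index;
            this.request = request;
        }

        String proceed(String request) {
            if (index >= interceptors.size()) throw new AssertionError("no interceptor left");
            Chain next = new Chain(interceptors, index + 1, request); // chain for the next one
            Interceptor interceptor = interceptors.get(index);       // current interceptor
            return interceptor.intercept(next);
        }
    }

    static final List<String> log = new ArrayList<>();

    /** An interceptor that records when it runs before and after proceed(). */
    static Interceptor logging(String name) {
        return chain -> {
            log.add(name + ": before proceed");
            String response = chain.proceed(chain.request);
            log.add(name + ": after proceed");
            return response;
        };
    }

    static String run(String request) {
        log.clear();
        // The last interceptor produces the response and never calls proceed().
        Interceptor terminal = chain -> {
            log.add("terminal: produce response");
            return "response(" + chain.request + ")";
        };
        List<Interceptor> interceptors = Arrays.asList(logging("A"), logging("B"), terminal);
        return new Chain(interceptors, 0, request).proceed(request);
    }

    public static void main(String[] args) {
        System.out.println(run("GET /"));
        for (String line : log) System.out.println(line);
    }
}
```

The log shows A and B running their pre-processing in list order and their post-processing in reverse order, which is the same nesting the real chain gives to retry, bridge, cache, connect, and call-server interceptors.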

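Now for the code of the individual interceptors.

RetryAndFollowUpInterceptor

`public Response intercept(Chain chain) throws IOException {`

When a RouteException or an IOException occurs, recover is called. If it decides that recovery is possible the request is retried, otherwise the exception is rethrown.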
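The retry loop can be sketched in a few lines. This is a rough model under stated assumptions: the `recover` policy below simply caps the number of attempts, whereas OkHttp's recover looks at the client configuration, the kind of exception, and whether another route is available. `RetrySketch`, `Attempt`, and `demo` are hypothetical names.

```java
import java.io.IOException;

class RetrySketch {
    /** One attempt at the rest of the chain; receives the 1-based attempt number. */
    interface Attempt {
        String run(int attemptNumber) throws IOException;
    }

    /** Assumed policy for illustration only: recover while attempts remain. */
    static boolean recover(IOException e, int attemptsSoFar, int maxAttempts) {
        return attemptsSoFar < maxAttempts;
    }

    static String proceedWithRetry(Attempt attempt, int maxAttempts) throws IOException {
        int attempts = 0;
        while (true) {
            attempts++;
            try {
                return attempt.run(attempts);
            } catch (IOException e) {
                if (!recover(e, attempts, maxAttempts)) throw e; // not recoverable: give up
                // recoverable: fall through and loop for another attempt
            }
        }
    }

    /** Runs a fake request that fails a fixed number of times before succeeding. */
    static String demo(int failuresBeforeSuccess, int maxAttempts) {
        try {
            return proceedWithRetry(n -> {
                if (n <= failuresBeforeSuccess) throw new IOException("attempt " + n + " failed");
                return "ok on attempt " + n;
            }, maxAttempts);
        } catch (IOException e) {
            return "gave up: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(demo(2, 3));
        System.out.println(demo(5, 2));
    }
}
```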

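BridgeInterceptor

`@Override public Response intercept(Chain chain) throws IOException {`

BridgeInterceptor mainly does two things:

Before the request is sent, it takes the information from the user's request and turns it into headers through Request.Builder.header, then passes the request on.

When the response comes back, it processes the response headers and the returned body.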

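Cache interceptor

CacheInterceptor

`@Override public Response intercept(Chain chain) throws IOException {`

If the user has configured a cache, cacheCandidate is the Response stored in that cache for this request; otherwise cacheCandidate = null. The interceptor then obtains cacheResponse and networkRequest from CacheStrategy.

If cacheCandidate != null but cacheResponse == null, the cached entry is not usable and cacheCandidate is closed and discarded.

If networkRequest == null (the strategy does not allow the network to be used) and cacheResponse == null (there is no usable cache), a failure response is returned. The chain ends here and does not continue.

If networkRequest == null and cacheResponse != null, the cached response is returned. The chain also ends here and does not continue.

Otherwise, the next interceptor is executed, which means the request goes to the network.

Once the rest of the chain has finished and returned the final response, the cache is updated if an entry already exists, and the response is added to the cache if it does not.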
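The branches above can be modeled with a map as the cache. This is a simplified sketch, not OkHttp's CacheInterceptor: `CacheFlowSketch` is a hypothetical class, the `networkAllowed` flag stands in for `networkRequest != null`, and freshness checks and conditional requests are left out.

```java
import java.util.HashMap;
import java.util.Map;

class CacheFlowSketch {
    final Map<String, String> cache = new HashMap<>(); // url -> cached body
    int networkCalls = 0;

    /** Stand-in for calling the rest of the interceptor chain. */
    String callNetwork(String url) {
        networkCalls++;
        return "body of " + url;
    }

    /** networkAllowed == false models the case networkRequest == null. */
    String intercept(String url, boolean networkAllowed) {
        String cacheResponse = cache.get(url);
        if (!networkAllowed && cacheResponse == null) {
            return "504 (network not allowed and nothing cached)"; // chain stops here
        }
        if (!networkAllowed) {
            return cacheResponse; // served from cache, chain stops here
        }
        String networkResponse = callNetwork(url); // continue down the chain
        cache.put(url, networkResponse);           // insert or update the cache entry
        return networkResponse;
    }

    public static void main(String[] args) {
        CacheFlowSketch c = new CacheFlowSketch();
        System.out.println(c.intercept("/a", false)); // nothing cached yet
        System.out.println(c.intercept("/a", true));  // goes to the network, fills the cache
        System.out.println(c.intercept("/a", false)); // now answered from the cache
        System.out.println("network calls: " + c.networkCalls);
    }
}
```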

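ConnectInterceptor

`@Override public Response intercept(Chain chain) throws IOException {`

The connection reuse logic lives here: it looks for an available connection and reuses it. We will analyze this shortly.

networkInterceptors

These are the user-defined network interceptors.

CallServerInterceptor

`@Override public Response intercept(Chain chain) throws IOException {`

In summary: OkHttpClient implements Call.Factory and is responsible for creating a Call for a Request.

RealCall is the concrete implementation of Call. Its asynchronous enqueue() is scheduled by the Dispatcher on an ExecutorService, and the actual network request goes through the same path as the synchronous execute(): both are implemented by getResponseWithInterceptorChain().

getResponseWithInterceptorChain() uses a chain of Interceptors (the chain-of-responsibility pattern) to implement caching, transparent compression, network I/O, and other features layer by layer, and finally returns the response to the caller.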

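OkHttp connection pool reuse

By default OkHttp's connection pool keeps at most 5 idle socket connections, with a keep-alive time of 5 minutes. How is that achieved?

ConnectInterceptor calls newStream:

`public HttpCodec newStream(OkHttpClient client, boolean doExtensiveHealthChecks) {`

From the analysis above, the flow for obtaining a RealConnection can be summarized as follows:

1. ConnectInterceptor obtains the StreamAllocation reference and uses it to look for a RealConnection.

2. If the StreamAllocation already has a RealConnection, that connection is returned directly. Otherwise the connection pool is searched and a match is returned. If nothing is found, a new connection is created and put into the pool, followed by a further check on whether a redundant socket has to be released.

3. During the actual connect step, one of two connection modes is chosen: a tunnel connection (Tunnel) or a plain socket connection (Socket).

4. The RealConnection and the HttpCodec are passed on to the next interceptor.

Fetching a connection from the pool goes through the get() method of Internal. Internal has a static instance that is initialized in a static initializer block of OkHttpClient, and Internal's get() calls the pool's get() to obtain a connection. This also shows one benefit of connection reuse: it skips the TCP and TLS handshakes. Establishing a connection takes time, so reusing one makes network access more efficient.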

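ConnectionPool

The connection pool is implemented in ConnectionPool.

`/** Maximum number of idle socket connections */`

The constructor shows that the maximum number of idle socket connections is 5. The ConnectionPool is created when OkHttpClient is instantiated.

`RealConnection get(Address address, StreamAllocation streamAllocation) {`

Now for the put and get methods. get iterates over the cached connection list. When a connection's allocation count is below its limit and the request's address exactly matches the address of that cached connection, the connection is reused for the request.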
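The lookup rule can be sketched as below. This is a JDK-only model under stated assumptions: `PoolLookupSketch` and `Conn` are hypothetical, an address is reduced to a `host:port` string, and the allocation count is a plain integer instead of a list of stream allocations.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class PoolLookupSketch {
    /** Minimal stand-in for a pooled connection. */
    static final class Conn {
        final String address;      // assumed "host:port" form; the real Address carries more
        final int allocationLimit; // how many streams may share this connection
        int allocations;           // streams currently using it

        Conn(String address, int allocationLimit) {
            this.address = address;
            this.allocationLimit = allocationLimit;
        }
    }

    final Deque<Conn> connections = new ArrayDeque<>();

    void put(Conn connection) {
        connections.add(connection);
    }

    /** Returns a reusable connection for the address, or null if none qualifies. */
    Conn get(String address) {
        for (Conn c : connections) {
            if (c.allocations < c.allocationLimit && address.equals(c.address)) {
                c.allocations++; // the caller now holds one stream on this connection
                return c;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        PoolLookupSketch pool = new PoolLookupSketch();
        pool.put(new Conn("a.example:443", 1));
        System.out.println("first lookup reused:  " + (pool.get("a.example:443") != null));
        System.out.println("second lookup reused: " + (pool.get("a.example:443") != null));
        System.out.println("other host reused:    " + (pool.get("b.example:443") != null));
    }
}
```

With a limit of 1 the connection can serve only one stream at a time, so the second lookup misses; a higher limit models a multiplexed connection that several requests can share.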

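The put method also kicks off the cleanup task:

`private final Runnable cleanupRunnable = new Runnable() {`

The cleanup method uses the reference count of each connection to work out how many connections are idle and how many are active, and marks the idle ones.

If an idle connection has been kept alive for more than 5 minutes, or the number of idle connections exceeds 5, that connection is removed from the Deque.

If there are idle connections left, cleanup returns the time until the next one expires. If all remaining connections are active, it returns 5 minutes. If there are no connections at all, it returns -1.

The cleanup algorithm resembles reference counting in garbage collection: when a connection's list of weak references to StreamAllocation is empty, the connection is idle and eligible for eviction.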
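The return values described above can be checked with a small model. This is a sketch, not the library's code: `CleanupSketch` is hypothetical, time is a plain `long` in arbitrary units, the reference count is an integer, and returning 0 after an eviction (meaning "run cleanup again right away") is an assumption about a case the text does not spell out.

```java
import java.util.ArrayList;
import java.util.List;

class CleanupSketch {
    static final class Conn {
        final int allocations; // number of streams still referencing the connection
        final long idleAt;     // time at which the connection became idle

        Conn(int allocations, long idleAt) {
            this.allocations = allocations;
            this.idleAt = idleAt;
        }
    }

    final int maxIdleConnections;
    final long keepAlive;
    final List<Conn> connections = new ArrayList<>();

    CleanupSketch(int maxIdleConnections, long keepAlive) {
        this.maxIdleConnections = maxIdleConnections;
        this.keepAlive = keepAlive;
    }

    /** Evicts at most one connection and returns how long to wait before the next run. */
    long cleanup(long now) {
        int inUse = 0;
        int idle = 0;
        Conn longestIdle = null;
        long longestIdleDuration = Long.MIN_VALUE;
        for (Conn c : connections) {
            if (c.allocations > 0) { inUse++; continue; } // still referenced: active
            idle++;
            long idleDuration = now - c.idleAt;
            if (idleDuration > longestIdleDuration) {
                longestIdleDuration = idleDuration;
                longestIdle = c;
            }
        }
        if (longestIdleDuration >= keepAlive || idle > maxIdleConnections) {
            connections.remove(longestIdle); // expired, or too many idle connections
            return 0;                        // assumed: clean up again immediately
        } else if (idle > 0) {
            return keepAlive - longestIdleDuration; // time until the next expiry
        } else if (inUse > 0) {
            return keepAlive;                // everything is active: check after keep-alive
        } else {
            return -1;                       // no connections at all
        }
    }

    public static void main(String[] args) {
        CleanupSketch pool = new CleanupSketch(5, 300);
        pool.connections.add(new Conn(1, 0));   // active connection
        pool.connections.add(new Conn(0, 900)); // idle for 100 units at now = 1000
        System.out.println("wait before next cleanup: " + pool.cleanup(1000));
    }
}
```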

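As this shows, the core of connection pool reuse is a Deque that holds the pooled connections.

Pros and cons of OkHttp

Pros:

- 1. Supports HTTP/2, which lets all requests to the same host share one socket. When HTTP/2 is not available, a connection pool is used to reduce request latency.

- 2. Compresses downloaded content with GZIP, and the compression is transparent to the user.

- 3. Uses response caching to avoid repeated network requests.

- 4. If your server has multiple IP addresses, OkHttp tries the other addresses when the first one fails to connect. This matters for IPv4 plus IPv6 setups and for services hosted in multiple data centers.

- 5. Users can write their own interceptors to intercept network traffic as they see fit.

- 6. Supports uploading and downloading large files.

- 7. Supports cookie persistence.

- 8. Supports self-signed HTTPS connections once a valid certificate is configured.

- 9. Supports header-based cache policies that reduce repeated network requests.

Cons:

- 1. Network callbacks run on a worker thread, so the user has to post results to the main thread manually.

- 2. There are many parameters, which makes configuration complex.

Because of these drawbacks, OkHttpUtils and similar wrappers came into being. In the next post we will go through the OkHttpUtils source code to see how it wraps OkHttp and addresses these problems.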